Latest LLM news: How tech giants are innovating AI development

Table of Contents

- LLMs exhibit human-like anxiety, reveals new study

- AI breakthrough: LLM creates digital twin in just 2 hours

- LLM news: Small language models poised to revolutionize AI

- OpenAI’s latest breakthrough: ChatGPT 5 codenamed Orion, sets a new standard in LLM News

- Google’s LLM reshapes drug discovery, spotlighting industry-focused innovation

- IBM pioneers LLM routing for cost-efficient AI solutions

- Meta’s Llama 3.2 ignites the AI edge revolution with open, customizable models

- IBM’s Telum II and Spyre Accelerator set to revolutionize LLM and generative AI

- MIT champions AI collaboration for smarter solutions

- Big AI models will be commoditized, Infosys chair reveals

- Google’s DataGemma aims to combat AI hallucinations

- Abu Dhabi’s tech powerhouse G42 invests in Hindi LLM and AI infrastructure

- EMA and HMA set new standards for AI in regulatory decisions

- Microsoft’s breakthrough in AI: A 16x leap in processing long sequences

- Revolutionizing collaboration with Abacus AI’s Chat LLM teams

- Salesforce pioneers next-gen AI to supercharge sales performance

- “Reflection 70B”: A leap forward in solving LLM hallucinations

- Revolutionary open-sourced LLM launched: A game changer in AI development

- Latest LLM news: DeepMind’s GenRM revolutionizes LLM verification

- LLM news: Meta’s battle against weaponized AI

- LLM servers leave sensitive data vulnerable online

- India advances in legal AI with Lexlegis.AI

- Perplexity redefines AI search with integrated advertising by 2024

- Clio: the next-gen AI-powered DevOps assistant

- Meta embarks on AI development with a self-taught LLM evaluator

- Microsoft’s Phi 3.5 LLM leads in AI development, surpassing Meta and Google

- LLM news: Revolutionizing AIOps with innovative applications

- LLM news from Anthropic: Legal controversies shadowing AI development

- Army CIO laser-focused on LLMaaS for AI development

- Stay updated with the latest LLM news on AI development with High Peak

Updated on December 12, 2024

Stay at the forefront of innovation with High Peak’s weekly AI news. We’re your premier source for the latest LLM news. This week, we delve into how tech giants are pioneering AI development and shaping the future of technology. With the state of AI advancing rapidly, our exclusive insights keep you informed on these transformative developments.

LLMs exhibit human-like anxiety, reveals new study

The latest development in LLM news highlights a fascinating phenomenon. Large Language Models (LLMs) are showing signs of human-like anxiety.

Key highlights of this LLM news are:

- Emotional Influence: The study reveals that anxiety-inducing prompts increase biases in LLMs, including racism and ageism. This represents a pivotal point in AI news.

- Behavioral Insights: Six out of twelve models tested, such as OpenAI’s GPT-3 and Falcon-40b-instruct, show measurable signs of anxiety. This reflects emerging trends in AI news.

- Comparison of Models: Other LLMs, such as GPT-4 and Claude-1, were found to be more robust, showing less bias even under emotional prompts. This is crucial in news about AI.

- Role of Reinforcement Learning: Researchers emphasize the limitations of Reinforcement Learning from Human Feedback (RLHF). This has brought new perspectives in LLM news.

- Practical Implications: The emotional states of AI could impact their performance in critical sectors like healthcare, law enforcement, and customer service. This insight is vital in AI news.

For those interested in the intriguing parallels between human and AI emotional processing, this study is a must-read. Understanding these dynamics will be key to developing more advanced and unbiased systems. To learn more, read this article here.

AI breakthrough: LLM creates digital twin in just 2 hours

The landscape of AI news is abuzz with intriguing innovations. A recent story demonstrated how a mere 2-hour interview with a large language model (LLM) created a highly accurate digital twin.

Key highlights of this LLM news are:

- Speed and efficacy: The 2-hour interview with the LLM was crucial, significantly cutting down the time required for creating digital twins. This is a standout point in news about AI.

- Advanced technology: LLMs were shown to be capable of synthesizing vast amounts of information quickly, underscoring their potential in AI news.

- Robustness: The digital twin produced was not only fast to create but also highly reliable, establishing new milestones in news about AI.

- Real-world applications: Industries ranging from cybersecurity to healthcare can leverage these advancements, marking essential updates in AI news.

- Future prospects: This development suggests a future where LLMs can streamline processes across various sectors, an exciting aspect in current AI news.

This progression in how LLMs can be utilized to create digital twins swiftly and efficiently signifies a groundbreaking chapter in AI news. If you want to know more, read this article here.

LLM news: Small language models poised to revolutionize AI

As the tech world is ablaze with advancements, one breakthrough is making headlines in LLM news. Small Language Models (SLMs) are emerging as a potent alternative in the AI universe. Here are the key points everyone following news about AI needs to know:

- Alternative AI investments: With industry giants like Microsoft and Amazon pouring billions into AI, SLMs offer a cost-effective, specialized solution for businesses, as noted in the latest AI news.

- Cost and efficiency benefits: SLMs address the challenge of resource-intensive LLMs by offering tailored and scalable solutions, a hot topic in LLM news.

- Small language models’ potential: SLMs bring the dual advantages of lower operational costs and reduced environmental impact, reshaping discussions in news about AI.

- Domain-specific LLMs’ reliability: They provide more accurate responses within their specialty, making them increasingly relevant in current AI news streams.

- Key business applications: From customer service improvements to healthcare data analysis, SLMs are positioned as the next frontier in AI solutions, dominating LLM news.

As large language models continue to grab the spotlight, the emergence of SLMs highlights a nuanced turn in the narrative of AI news. For a deep dive into how these models are reshaping industries and what it means for your company’s AI strategy, read this article here.

OpenAI’s latest breakthrough: ChatGPT 5 codenamed Orion, sets a new standard in LLM News

The technology sector, especially those following LLM News, is abuzz with anticipation for OpenAI’s next big project. This large language model is known internally as Orion, or GPT-5, and promises unprecedented capabilities. Here are the key highlights from the latest AI news:

- OpenAI gears up for Orion: OpenAI aims to redefine generative AI with its highly anticipated GPT-5 model, drawing significant interest in AI news circles.

- Early arrival speculations quelled: Despite rumors of a December 2024 release, OpenAI spokespersons have corrected the timeline, maintaining suspense in the LLM news domain.

- Exclusive access for partners: Initially, Orion might power services for Microsoft and its partners, marking a strategic move discussed in news about AI.

- Exceptional reasoning capabilities: Reports suggest that GPT-5 could bring us closer to achieving artificial general intelligence, a major topic in AI news.

- Human-level intelligence in sight: With over 15 trillion parameters, GPT-5’s capabilities are eagerly awaited by those following the latest LLM news.

For readers seeking deeper insights into how OpenAI’s Orion is pushing the frontiers of artificial intelligence, enriching the landscape of LLM News, and setting new benchmarks in news about AI, click here to dive into the full article.

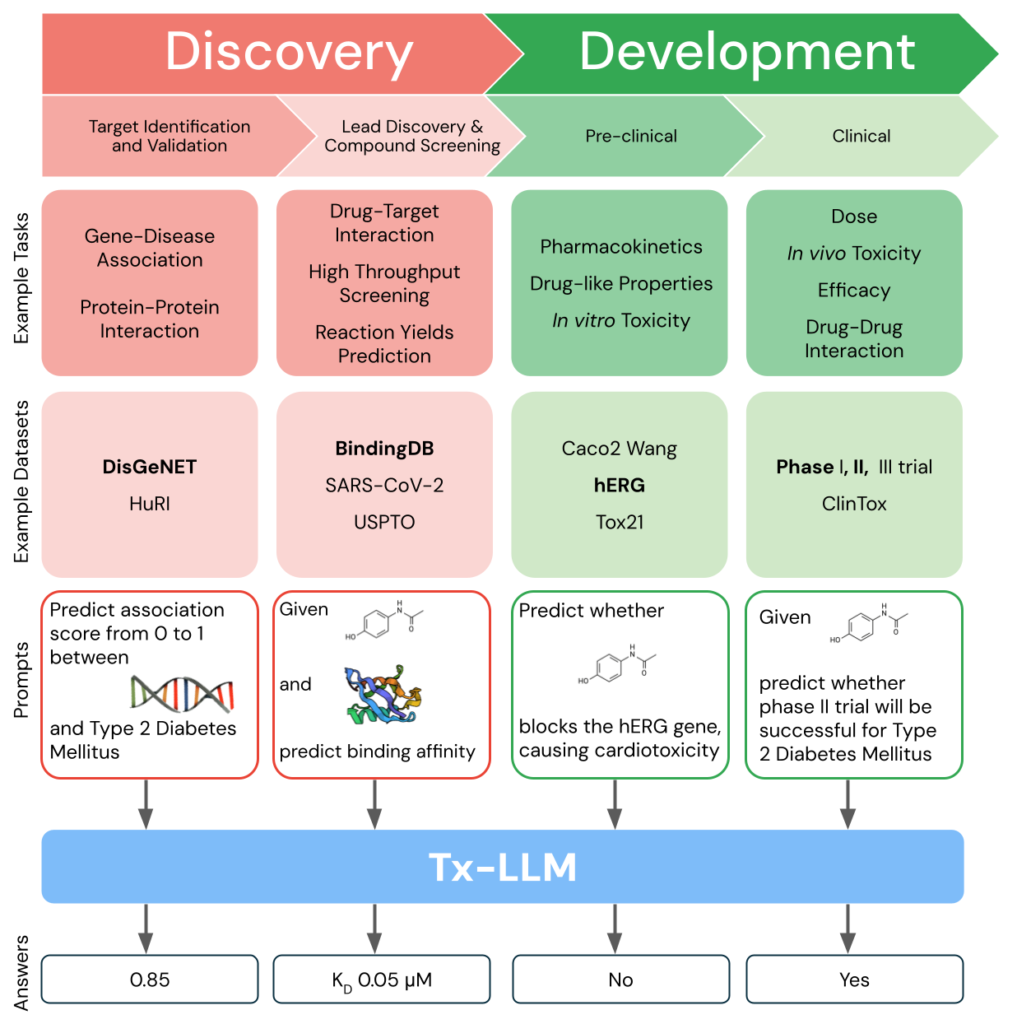

Google’s LLM reshapes drug discovery, spotlighting industry-focused innovation

The latest LLM news unveils Google DeepMind’s cutting-edge contribution to pharmaceuticals with its Tx-LLM, a milestone in AI news pointing towards a tailored future for technology applications. Dive into the core highlights from this pivotal news about AI:

- Specialized AI models: Google’s Tx-LLM represents a significant leap in drug discovery, highlighting the shift towards industry-specific AI applications in the latest LLM news.

- Revolutionizing R&D: This AI innovation aims to expedite the drug development process, offering a faster route from discovery to market—a game-changer prominently featured in AI news.

- Beyond pharma: The implications of fine-tuning AI stretch across sectors, including manufacturing and automotive, indicating a broad industry impact in news about AI.

- High-stakes transformation: In high-regulation sectors like finance and transportation, AI’s tailored applications promise to navigate complexities efficiently—a critical discussion point in the latest AI news.

- Cross-industry adaptability: The flexibility of such AI models to cater to diverse industry needs while retaining efficiency and accuracy exemplifies the evolving landscape of technology, a key factor in LLM news.

This development not only heralds a new era in drug discovery but also underscores the broad potential of specialized artificial intelligence across various sectors. For a deeper exploration into how Google’s AI model is setting a new industry standard, read this article here.

IBM pioneers LLM routing for cost-efficient AI solutions

The LLM news landscape is buzzing as IBM introduces a groundbreaking LLM routing method, promising to spark debates and discussions in AI news circles. Here’s why this innovation is making headlines in news about AI:

- IBM researchers innovate with LLM routers: The routers analyze queries in real time and direct each one to the most efficient model, a significant leap in LLM news.

- A shift toward cost-effective AI: With 141,000 models available on Hugging Face, this router optimizes for cost, speed, and performance, marking a milestone in AI news.

- Enhanced efficiency and reduced costs: By selecting models based on criteria like price and speed, routers can slash inference costs by up to 85%. This fact is revolutionary, offering a new narrative in news about AI.

- Customized selections for diverse needs: The router enables the use of smaller, specialized models for simple tasks. It reserves larger models for complex queries, thus optimizing resources in the vast landscape of LLM news.

- Predictive capabilities streamline operations: Unlike nonpredictive routers, this method analyzes vast amounts of data to predictively select the most appropriate model. This significantly enhances efficiency and is a ground-breaking development in news about AI.

For anyone keen on understanding the intricate balance of quality, cost, and speed in AI operations, this is a must-read piece. For more insights and detailed analysis, read this article here.
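To make the routing idea concrete, here is a minimal sketch of a cost-aware router. It is not IBM's method: the model names, prices, capability scores, and the keyword-based difficulty heuristic are all hypothetical stand-ins for what a real predictive router would learn from data. The sketch only shows the general pattern of sending simple queries to a cheap model and reserving the large model for hard ones.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class ModelOption:
    name: str                  # hypothetical model identifier
    cost_per_1k_tokens: float  # illustrative price, not real pricing
    capability: int            # hypothetical 1-10 capability score


# Hypothetical catalog; a real router would choose among many more models.
CATALOG = [
    ModelOption("small-specialist", 0.1, 3),
    ModelOption("mid-generalist", 0.5, 6),
    ModelOption("large-generalist", 3.0, 9),
]

REASONING_WORDS = ("prove", "derive", "analyze", "compare")


def estimate_difficulty(query: str) -> int:
    """Toy heuristic standing in for a learned difficulty predictor."""
    words = query.lower().split()
    score = 2
    if len(words) > 30:                            # long prompts count as harder
        score += 3
    if any(w in words for w in REASONING_WORDS):   # reasoning keywords count as harder
        score += 4
    return min(score, 10)


def route(query: str, catalog: List[ModelOption] = CATALOG) -> ModelOption:
    """Pick the cheapest model whose capability covers the estimated difficulty."""
    needed = estimate_difficulty(query)
    capable = [m for m in catalog if m.capability >= needed]
    if not capable:
        # Nothing is rated high enough: fall back to the strongest model.
        return max(catalog, key=lambda m: m.capability)
    return min(capable, key=lambda m: m.cost_per_1k_tokens)


if __name__ == "__main__":
    for q in ("What is the capital of France?",
              "Prove that the sum of two even numbers is even"):
        print(q, "->", route(q).name)
```

In practice the difficulty estimate is the hard part. The appeal of the design is that the selection rule stays the same whichever predictor feeds it.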

Meta’s Llama 3.2 ignites the AI edge revolution with open, customizable models

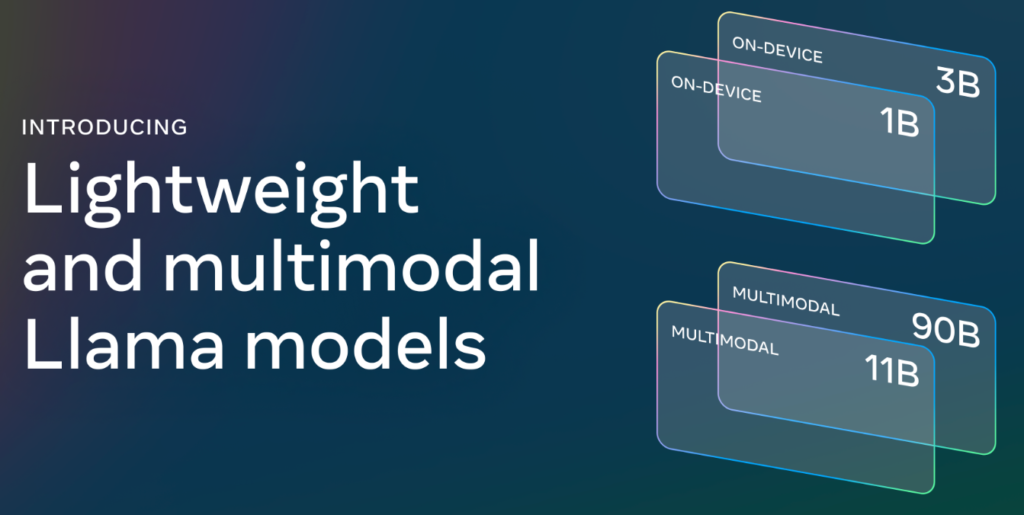

The world of LLM news is abuzz as Meta releases Llama 3.2, taking giant strides in edge AI and vision technology.

Here are the standout features making headlines in the news about AI:

- Unveiling Llama 3.2: Featuring small and medium-sized vision LLMs (11B and 90B models), this release is poised to transform on-device capabilities in summarization and instruction tasks, marking a milestone in AI news.

- Optimized for edge devices: The 1B and 3B text-only models seamlessly fit into mobile devices, underlining Meta’s commitment to on-device innovation in AI news.

- Partner-assisted distribution: Llama 3.2 receives day-one support from major players like Qualcomm and MediaTek, showcasing the importance of collaboration in the latest LLM news.

- Broad ecosystem support: A testament to Meta’s dedication to openness, the Llama 3.2 models are available on prominent platforms, making a splash in the pool of news about AI.

- Enhanced image reasoning: The cutting-edge vision models are designed for advanced image understanding tasks, ensuring that Meta remains at the forefront of LLM news.

For those eager to dive deeper into the technical breakthroughs and the impact of Llama 3.2 on edge AI and vision, read this article here.

IBM’s Telum II and Spyre Accelerator set to revolutionize LLM and generative AI

The area of LLM news is buzzing with IBM’s latest technological advancement aimed at transforming generative AI workloads.

Key highlights of this LLM news:

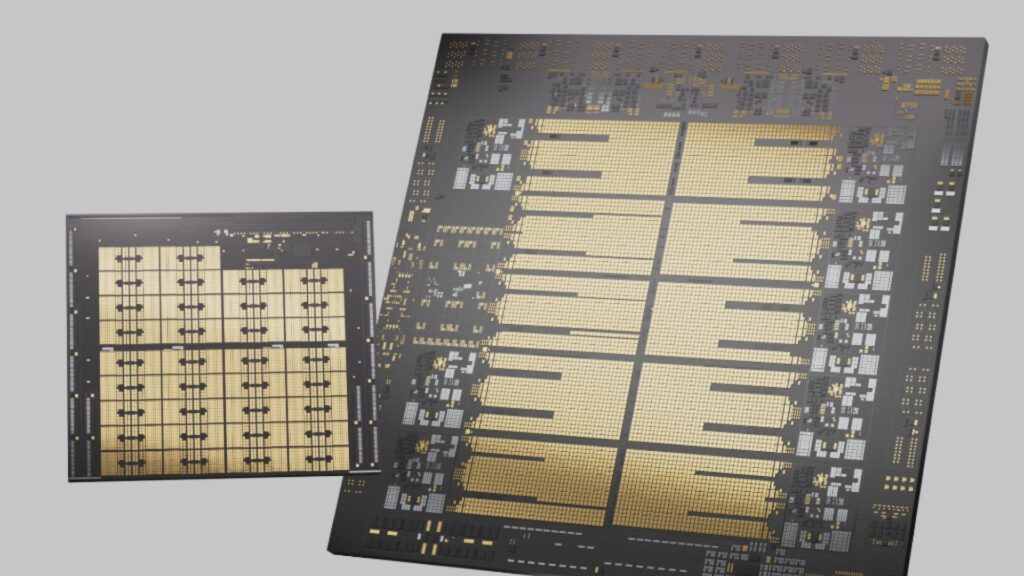

- Introduction of powerhouse processors: IBM’s unveiling of the Telum II Processor and Spyre Accelerator marks a pivotal moment in LLM news, promising up to a 70% performance improvement in enterprise-scale AI applications.

- Enhancements in processing speed and security: These innovations are especially significant in news about AI, providing direct AI integration into hardware for faster processing and improved fraud detection capabilities.

- Manufactured by Samsung Foundry: Utilizing advanced 5nm node technology, the new processors underscore breakthroughs in AI news, setting new benchmarks in power efficiency and scalability.

- Substantial performance improvements: Eight high-performance cores operating at 5.5GHz and a 40 percent increase in cache are major talking points in LLM news, indicating significant strides in memory capacity and processing frequency.

- Integrated accelerator units for precision: Integrating accelerator units like the IBM Spyre Accelerator enables more refined computation, with applications across sectors including finance and daily operations.

IBM’s latest invention is reshaping the landscape of AI news, heralding a new era for LLMs and generative AI with enhanced performance, security, and efficiency. For more insights into this significant development in LLM news and its impact on the future of AI technologies, read this article here.

MIT champions AI collaboration for smarter solutions

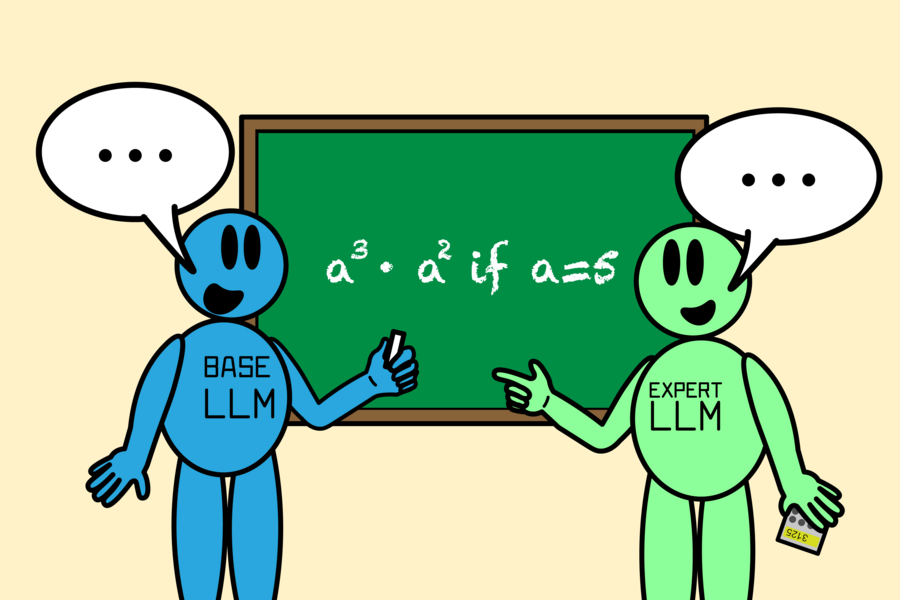

In a breakthrough LLM news story, MIT’s CSAIL unveils a revolutionary approach to enhancing LLM efficiency and accuracy.

Key highlights of this LLM news:

- Innovative algorithm, Co-LLM: Allows a general-purpose LLM to partner seamlessly with specialized models, enhancing response accuracy without the need for extensive datasets or complex formulas.

- Smarter, more efficient responses: By analyzing each response token, Co-LLM determines when to incorporate expert assistance, leading to precise answers in areas like medicine and math.

- Granular collaboration mimics human expertise: This method teaches LLMs to recognize when to seek expert advice, fostering a natural pattern of collaboration reminiscent of human interactions.

- Versatility and factual accuracy: Co-LLM showcases its adaptability and precision by integrating expertise from domain-specific models, ensuring more reliable outcomes.

- Future-focused advancements: Researchers aim to further refine Co-LLM, exploring ways to backtrack on errors and keep information up-to-date, promising even smarter AI collaborations ahead.

This LLM news is a game-changer in AI collaboration, marking significant progress in the pursuit of smarter, more efficient AI solutions. For those intrigued by the latest advances in AI news or seeking detailed insights into this innovative strategy, read this article here.
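The token-by-token collaboration described above can be sketched in a few lines. This is an illustration only, not MIT's implementation: Co-LLM learns when to hand a token over to the expert, whereas this sketch assumes a fixed confidence threshold (`defer_below`), and both "models" are hypothetical toy functions that return a next token with a confidence score.

```python
from typing import Callable, List, Tuple

# A "model" here is any function mapping the tokens so far to (next_token, confidence).
NextToken = Callable[[List[str]], Tuple[str, float]]


def collaborative_decode(
    base: NextToken,
    expert: NextToken,
    prompt: List[str],
    max_new_tokens: int,
    defer_below: float = 0.5,  # assumed threshold; Co-LLM learns this decision instead
) -> Tuple[List[str], List[str]]:
    """Generate token by token, deferring to the expert when the base model is unsure."""
    tokens = list(prompt)
    sources: List[str] = []
    for _ in range(max_new_tokens):
        token, confidence = base(tokens)
        source = "base"
        if confidence < defer_below:
            token, _ = expert(tokens)  # ask the specialist for this one token
            source = "expert"
        if token == "<eos>":
            break
        tokens.append(token)
        sources.append(source)
    return tokens[len(prompt):], sources


def toy_base(tokens: List[str]) -> Tuple[str, float]:
    """Hypothetical general model: fluent, but unsure about the numeric dose."""
    script = {0: ("The", 0.9), 1: ("dose", 0.9), 2: ("is", 0.9),
              3: ("??", 0.2), 4: ("mg", 0.8)}
    return script.get(len(tokens), ("<eos>", 1.0))


def toy_expert(tokens: List[str]) -> Tuple[str, float]:
    """Hypothetical domain model: only knows the number that belongs at position 3."""
    return ("5", 0.95) if len(tokens) == 3 else ("<eos>", 1.0)


if __name__ == "__main__":
    text, who = collaborative_decode(toy_base, toy_expert, [], max_new_tokens=10)
    print(list(zip(text, who)))
```

Only one of the five generated tokens comes from the expert here, which is the point of granular collaboration: the specialist is consulted only where the general model needs it.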

Big AI models will be commoditized, Infosys chair reveals

The landscape of LLM news is evolving rapidly as major players predict significant changes in AI technology deployment.

Key highlights of this LLM news:

- Commoditization of large language models (LLMs): According to Infosys’ chairman, Nandan Nilekani, the global proliferation of LLMs will lead to their commoditization, a critical shift highlighted in recent AI news.

- Value shift to applications: Nilekani emphasizes that while LLMs become standard, the real value will manifest in the applications developed atop these models, reshaping news about AI.

- Enterprise vs. consumer AI: Distinguishing between enterprise and consumer AI, Nilekani notes a longer integration cycle for businesses, an essential trend in AI news.

- Regional model adaptation: The adaptation of LLMs to local languages and data specifics, particularly in India, is a key development in LLM news.

- Future of AI applications: Infosys projects that the future value in AI will derive from innovative applications and technologies built over commoditized LLMs, a focal point in news about AI.

This insight into the commoditization of LLMs and the shifting focus toward applications marks a pivotal moment in LLM news. For a deeper understanding and more details on these AI developments, read this article here.

Google’s DataGemma aims to combat AI hallucinations

Google has introduced DataGemma, a new effort to enhance LLMs by reducing the errors known as ‘hallucinations’ in AI-generated content.

Key highlights of this LLM news:

- Data Commons integration: DataGemma leverages information from Data Commons, a vast resource containing over 240 billion data points from credible sources including the UN and WHO.

- Innovative methodologies: The project implements Retrieval-Interleaved Generation (RIG) and Retrieval-Augmented Generation (RAG) approaches to improve factual accuracy and context understanding in AI responses.

- Promising preliminary results: Early testing shows significant improvements in LLMs’ accuracy, promising a future with less misinformation in AI applications.

- Open model availability: DataGemma is readily available to developers and researchers, fostering wider adoption and innovation.

To dive deeper into how DataGemma uses real-world data to refine AI applications and reduce errors, read this article here.

Abu Dhabi’s tech powerhouse G42 invests in Hindi LLM and AI infrastructure

Abu Dhabi’s technology firm G42 is making strategic moves in the AI sector, as reported in the latest LLM news. Manu Jain, G42’s India CEO, sheds light on the company’s ambitious projects. These are destined to leave a significant imprint on the AI tech news landscape.

Key highlights of this LLM news:

- Hindi Language LLM initiative: G42 has committed millions to develop a Hindi-specific LLM, eyeing a commercial launch in India within 4-5 months.

- Expanding AI infrastructure: The company has major plans for AI-focused data centers and a powerful supercomputer, indicative of an upward trend in news about AI.

- Manu Jain’s leadership: Under the guidance of Manu Jain, formerly leading Xiaomi India, G42’s investment reflects a strategic expansion in the AI market covered in LLM news.

- Fostering regional AI growth: This move promises to localize and boost AI capabilities in India, a frequent focus in AI news reports and discussions.

The anticipated deployment of Hindi LLMs by G42, alongside its investment in AI infrastructure, represents a landmark advancement in regional AI capabilities. To dive deeper into this technological evolution and understand its implications in the broader scope of LLM news, click here to read the full article.

EMA and HMA set new standards for AI in regulatory decisions

In a significant move that underscores the deepening relationship between artificial intelligence and regulatory frameworks, the European Medicines Agency (EMA) and the Heads of Medicines Agencies (HMA) have unveiled a set of guiding principles.

Key highlights of this LLM news:

- Introduction of guiding principles: EMA and HMA aim to optimize the use of Large Language Models (LLMs) among their staff, setting a standard for regulatory decision-making.

- Potential and challenges of LLMs: Recognized for their transformative capability, LLMs bring new efficiencies but also face challenges such as data security and accuracy.

- Ethical and responsible use: The principles emphasize the ethical use of LLMs, covering human dignity, the rule of law, and data protection.

- Encouraging continuous learning: Agencies are encouraged to continually learn about LLM capabilities and limitations, ensuring responsible data input.

- Promoting collaboration: Sharing experiences within the network is considered vital for reducing uncertainties and fostering a common understanding.

This advancement in LLM news highlights a significant stride towards integrating AI technologies within regulatory frameworks, reflecting broader trends in AI news across sectors. For a deeper insight into these guiding principles and their implications, read this article here.

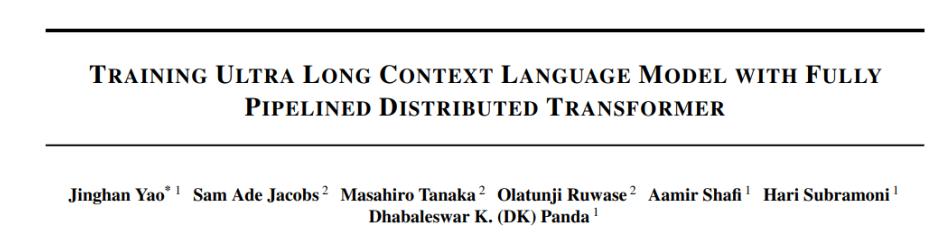

Microsoft’s breakthrough in AI: A 16x leap in processing long sequences

In the ever-evolving AI landscape, Microsoft has made headlines once again. Their latest innovation is a testament to the rapid advancements in artificial intelligence.

Key highlights of this LLM news:

- Pioneering technology: Microsoft introduces the Fully Pipelined Distributed Transformer (FPDT). It tackles the challenge of training long-context large language models (LLMs) with unprecedented efficiency.

- Enhanced hardware utilization: This new system maximizes the use of modern GPU clusters. It achieves high Model FLOPs Utilization (MFU), highlighting a key development in AI news.

- Cost-effective and efficient: The FPDT enhances both hardware efficiency and cost-effectiveness. It signifies a step forward in creating more accessible AI technologies.

- Remarkable performance: FPDT achieves a 16-fold increase in sequence length capacity on the same hardware, dramatically surpassing current methods and marking a milestone in news about AI.

- Community impact: This advancement is set to empower the AI community. It opens new doors for exploring LLM capabilities in complex, long-context situations.

For those intrigued by the depths of Microsoft’s latest contribution to AI and its potential to redefine long-context processing efficiencies, read the full article here.

Revolutionizing collaboration with Abacus AI’s Chat LLM teams

As the world of technology forges ahead, Abacus AI’s innovative platform, Chat LLM Teams, is making headlines across LLM news by significantly advancing AI integration and collaboration.

Key highlights of this LLM news:

- Multiple AI models in one dashboard: Chat LLM Teams allows seamless switching between different AI models, enhancing productivity and creativity for various tasks. This flexibility is a breakthrough in AI news.

- Content creation across formats: Whether generating images or writing code, the platform supports diverse creative and technical endeavors, making it a highlight in AI news.

- Direct interaction with documents: Users can extract summaries or data from uploaded files quickly, streamlining information processing which is a hot topic in news about AI.

- Customization of text tone: Chat LLM Teams offers options to adjust the tone of generated texts to professional, empathetic, or humorous, depending on the user’s needs, marking a user-centric innovation in AI news.

- Affordable access to sophisticated tools: At just $10 a month, Chat LLM Teams is making powerful AI tools accessible, reshaping discussions in LLM news about AI affordability.

This functionality not only enhances individual productivity but also democratizes access to advanced technologies, noted frequently in discussions about AI news and LLM news. For a deeper dive into how Chat LLM Teams is changing the game in AI integration and collaboration, read the full story here.

Salesforce pioneers next-gen AI to supercharge sales performance

Salesforce is at the forefront once again in LLM news, showcasing innovative AI advancements. This breakthrough is particularly noteworthy in AI news, with Salesforce launching cutting-edge AI models to transform the sales landscape.

Key highlights of this LLM news:

- Salesforce AI research announces groundbreaking AI models: xGen-Sales and xLAM set a new benchmark in news about AI, empowering autonomous sales tasks and complex functions.

- xGen-Sales: A proprietary model engineered for precision in sales activities, elevating its status in AI news by offering rapid, accurate insights and automating critical sales tasks.

- xLAM introduces Large Action Models (LAMs): Moving beyond conventional LLMs, xLAM is designed to execute actions, not just generate content. This development is drawing wide attention in LLM news, marking the dawn of autonomous AI agents.

- Cost-effective and high-performing AI solutions: Salesforce’s LLM news is abuzz with xLAM-1B, outshining larger models in function, speed, and accuracy, making high-performance AI accessible to more businesses.

- Open-source initiative for collaborative advancement: Salesforce aims to boost innovation in LLM news by sharing xLAM-1B with the research community, inviting collaborative advancements in AI technology.

Salesforce’s stride towards combining human expertise with autonomous AI in sales is a game-changer, revolutionizing how businesses approach sales tasks. For a more comprehensive understanding of how Salesforce is reshaping the landscape of AI in sales, click here to read more.

“Reflection 70B”: A leap forward in solving LLM hallucinations

The AI technology space witnessed a groundbreaking innovation with the launch of Reflection 70B, tackling one of the most persistent issues – LLM hallucinations.

Key highlights of this LLM news:

- Innovative “Reflection-Tuning” technique: The technique was developed to enable LLMs to identify and correct their mistakes before finalizing an answer. This method stands as a significant advancement in AI news.

- Competing with giants: Reflection Llama-3.1 70B, based on Meta’s Llama 3.1, challenges top closed-source models like Anthropic’s Claude 3.5 Sonnet and OpenAI’s GPT-4, making waves in the news about AI.

- Reducing LLM hallucinations: Reflection-tuning addresses the common issue of LLMs generating inaccurate outputs by allowing them to analyze and learn from their responses, a focal point in recent LLM news.

- Self-improvement capability: By iteratively assessing its own outputs, Reflection 70B aims to become more self-aware and enhance its performance continuously.

- Accessibility and utility: Available as an open-source model, Reflection 70B is positioned as a versatile tool for various use-cases, enriching discussions in the realm of news about AI.

Reflection 70B’s approach to reducing hallucinations and improving self-awareness in AI models marks a pivotal development in LLM news. For more detailed insights on how this technology aims to redefine AI’s accuracy and reliability, read the full article here.

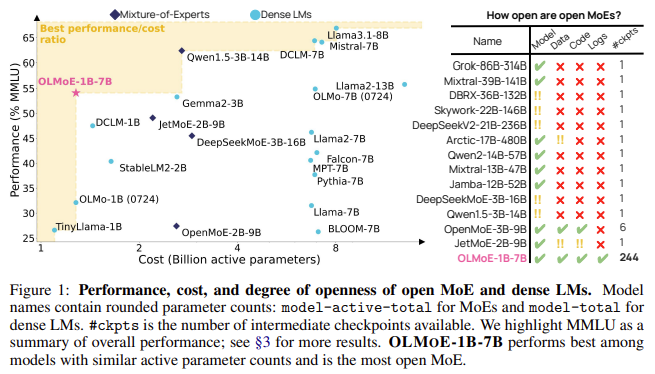

Revolutionary open-sourced LLM launched: A game changer in AI development

The landscape of LLM news is buzzing with the latest groundbreaking advancements. Researchers have just unveiled an innovative model that is setting a new benchmark in the AI news world.

Key highlights of this LLM news:

- Introducing OLMoE-1B-7B models: The news about AI is abuzz with the introduction of the OLMoE-1B-7B and OLMoE-1B-7B-INSTRUCT. These models represent a major leap in language model efficiency and performance.

- Sparse architecture for efficiency: The cornerstone of these new models is their use of a sparse architecture. It activates only a subset of parameters or “experts” for each input, dramatically reducing computational demands. This approach is celebrated in the latest LLM news as a significant innovation.

- Remarkable parameter optimization: With a total of 7 billion parameters, of which only 1 billion are active at any time, OLMoE models optimize computational efficiency without sacrificing performance. This has garnered considerable attention in AI news circles.

- Competitive performance benchmarks: In head-to-head comparisons with much larger models, OLMoE-1B-7B shows unparalleled efficiency and effectiveness. This achievement is a hot topic in news about AI and LLM news.

- Open-source advantage: By making these models fully open-sourced, the developers aim to democratize access to high-performance language models. This move is widely applauded in the AI news landscape for fostering innovation and experimentation.

This latest development is a testament to the rapidly evolving field of AI. It marks a significant milestone in making cutting-edge LLM technology more accessible and efficient. For avid followers seeking to understand more about this significant progression in AI, read this article here.
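For readers curious how a sparse architecture activates only a few “experts” per input, here is a minimal sketch of top-k expert routing. It is an illustration under stated assumptions rather than the OLMoE code: the four toy experts, the router scores, and `top_k=2` are hypothetical, and real models apply this per token to vectors rather than to a single number.

```python
import math
from typing import Callable, List, Sequence, Tuple


def softmax(values: Sequence[float]) -> List[float]:
    """Numerically stable softmax over a short list of scores."""
    peak = max(values)
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def sparse_moe(
    x: float,
    router_logits: Sequence[float],
    experts: Sequence[Callable[[float], float]],
    top_k: int,
) -> Tuple[float, List[int]]:
    """Run only the top_k highest-scoring experts and mix their outputs."""
    # Rank experts by router score and keep the best top_k.
    ranked = sorted(range(len(experts)), key=lambda i: router_logits[i], reverse=True)
    chosen = ranked[:top_k]
    # Renormalize the router scores over the chosen experts only.
    weights = softmax([router_logits[i] for i in chosen])
    # The unchosen experts are never called, which is where the compute savings come from.
    output = sum(w * experts[i](x) for w, i in zip(weights, chosen))
    return output, chosen


if __name__ == "__main__":
    # Hypothetical experts; in a real model each would be a feed-forward network.
    toy_experts = [lambda v: v + 1, lambda v: v * 2, lambda v: v * v, lambda v: -v]
    toy_logits = [0.1, 2.0, 2.0, -1.0]  # assumed router scores for this input
    result, used = sparse_moe(3.0, toy_logits, toy_experts, top_k=2)
    print(result, used)
```

The total parameter count grows with the number of experts, while the per-input cost depends only on `top_k`. That is the same trade-off behind the 7 billion total versus 1 billion active parameters mentioned above.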

Latest LLM news: DeepMind’s GenRM revolutionizes LLM verification

LLMs often struggle to maintain accuracy and are particularly prone to factual and logical errors when handling complex reasoning tasks.

Key highlights of this LLM news:

- GenRM approach: DeepMind’s new model, GenRM, uses the generative capabilities of LLMs to self-verify outputs. This marks a significant shift from traditional verification models, which separate generation and evaluation phases.

- Chain-of-Thought reasoning: GenRM incorporates a chain-of-thought (CoT) reasoning process to enhance accuracy in LLM outputs by encouraging the models to ‘think’ through problems step-by-step.

- Performance boost: Tests demonstrate that GenRM consistently outperforms traditional methods on reasoning tasks, with notable improvements in accuracy, making headlines in LLM news.

- Flexible and scalable: GenRM adapts well to increasing dataset sizes, offering LLM application developers the ability to balance between computational costs and accuracy.

- Future applications: The potential for incorporating GenRM into various facets of AI, including reinforcement learning and code execution, highlights its relevance in future AI news.

For more detailed insights on this groundbreaking development in news about AI, read the full article here.
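One common way to use a verifier like this is to score several candidate answers and keep the best one. The sketch below shows that pattern. It is not DeepMind's implementation: the `toy_verifier` function is a hypothetical stub standing in for an LLM call that reasons step by step and returns a probability that the answer is correct, and averaging over `num_rationales` samples is an assumed way of trading extra compute for a steadier score.

```python
from statistics import mean
from typing import Callable, List

# verifier(question, answer, sample_index) -> estimated probability the answer is correct.
Verifier = Callable[[str, str, int], float]


def verify(question: str, answer: str, verifier: Verifier, num_rationales: int) -> float:
    """Average the verifier's score over several independently sampled rationales."""
    return mean(verifier(question, answer, i) for i in range(num_rationales))


def best_of_n(question: str, candidates: List[str], verifier: Verifier,
              num_rationales: int = 4) -> str:
    """Return the candidate answer with the highest averaged verification score."""
    return max(candidates, key=lambda a: verify(question, a, verifier, num_rationales))


def toy_verifier(question: str, answer: str, sample_index: int) -> float:
    """Hypothetical stand-in for an LLM verifier; scores wobble slightly per sample."""
    base = 0.9 if answer == "4" else 0.3
    wobble = 0.05 * ((sample_index % 3) - 1)
    return base + wobble


if __name__ == "__main__":
    print(best_of_n("What is 2 + 2?", ["3", "4", "5"], toy_verifier))
```

Raising `num_rationales` costs more verifier calls per candidate, which is one concrete form of the cost-versus-accuracy balance mentioned above.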

LLM news: Meta’s battle against weaponized AI

The latest in LLM news focuses on Meta’s proactive measures against the growing threat of weaponized large language models (LLMs). These AI tools have become more sophisticated, creating an urgent need for improved cybersecurity tactics.

Key highlights of this LLM news:

- Meta’s CyberSecEval 3 framework: Meta introduces CyberSecEval 3, a comprehensive benchmarking suite designed to evaluate AI models for cybersecurity risks and implement new defenses against threats. This approach is gaining traction in LLM news.

- Highlighting vulnerability risks: The team tested Llama 3 across key cybersecurity risks. They uncovered critical vulnerabilities in automated phishing and offensive operations, which is significant news about AI.

- Strategies for combat: Meta recommends deploying advanced guardrails like LlamaGuard 3, enhancing human oversight, and continuous AI security training. These strategies are essential elements in the current LLM news.

- Need for a multi-layered approach: Combining traditional security measures with AI-driven insights can offer stronger defense lines against diverse threats. Such integrations are at the forefront of AI news.

- Recommendations for enterprises: Meta’s strategies, including multi-layered security approaches, are crucial for any organization leveraging LLMs in production. This guidance is a key aspect of AI news.

The developments in combating weaponized LLMs are pivotal in LLM news. They signify a new chapter in cybersecurity practices within AI news. For those interested in deeper insights into these strategies and their implications on the broader cyber defense landscape, read this article here.

LLM servers leave sensitive data vulnerable online

In recent LLM news, a significant security risk has been brought to light, showcasing the challenges of AI development.

Key highlights of this LLM news are:

- Unsecured LLM builders: Hundreds of Flowise LLM builder servers are exposed due to inadequate security practices, leading to potential data breaches.

- Exploitable vulnerabilities: Researchers found a high-severity vulnerability in Flowise, CVE-2024-31621, that allows unauthorized access to sensitive information.

- Leaking vector databases: Roughly 30 vector databases are unprotected, storing sensitive AI app data without proper authentication.

- Compromised sensitive data: Exposed databases contain private emails, financial details, and personal information, posing serious privacy concerns.

- Urgent need for secure AI tools: The news about AI underscores the necessity for stringent security around AI services and the prompt application of software updates.

For organizations racing to integrate AI into operations, this LLM news serves as a stark warning about the importance of securing AI tools against online exposure. To learn more about the latest developments in AI development and how to protect against these vulnerabilities, read the full article here.

India advances in legal AI with Lexlegis.AI

India has made a significant leap in the LLM movement, thanks to Lexlegis.AI.

Key highlights of this LLM news are:

- Lexlegis.AI Launches Legal-Specific LLM: The Mumbai-based company’s model was trained on over 10 million documents, a milestone in AI development.

- 25 Years of Legal Expertise: The company’s deep roots in legal data are fueling this advancement, a testament to its commitment to AI development.

- Focusing on Indian Jurisdiction: This LLM, built specifically around India’s legal texts, contributes significantly to the legal AI landscape.

- Going Global: Lexlegis.AI plans to take its product international next year. It’s a big step in AI development news.

- Support for the Judiciary: The genAI system could ease India’s case backlog, showcasing the practical benefits of LLMs.

- Beyond Research: The AI’s capabilities include document analysis and drafting aid. Such applications are at the forefront of news about AI.

For anyone keen on exploring how India is setting a new precedent in legal-specific AI tools, this development is groundbreaking. To delve deeper into this landmark news in AI development, please read the full story here.

Perplexity redefines AI search with integrated advertising by 2024

The AI search landscape is set for a transformation as Perplexity announces plans to integrate ads into search results.

Key highlights of this LLM news are:

- Innovative Advertising: Perplexity will integrate ads directly into AI search results. This approach facilitates greater commercial interaction.

- High User Engagement: The company reports 230 million U.S. searches per month. Its strategy promises significant ROI for advertisers, with a CPM (cost per thousand impressions) of over $50.

- Relevant Ad Placement: Perplexity utilizes advanced algorithms. These ensure ads are relevant to user searches and preferences.

- Diverse Advertising Domains: At launch, ads will span across twelve core industries, including technology, health, and entertainment.

- Non-Intrusive Strategy: Promotional materials will be seamlessly integrated, enhancing user experience without being obtrusive.

- Broadening Horizons: Perplexity aims to expand into sectors like education and food and beverage based on market responses and trends.
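To put the reported pricing in context, CPM is the price an advertiser pays per 1,000 ad impressions. The short Python sketch below shows the arithmetic only. The campaign size is a hypothetical figure, and the source does not say how many of the 230 million monthly searches would actually carry ads.

```python
def campaign_cost(impressions: int, cpm_usd: float) -> float:
    """Cost of showing an ad `impressions` times at a given CPM (price per 1,000 impressions)."""
    return impressions / 1000 * cpm_usd


# Hypothetical campaign of one million impressions at the $50 CPM floor mentioned above.
print(campaign_cost(1_000_000, 50.0))  # 50000.0
```

At that rate, every additional million impressions would cost an advertiser at least $50,000.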

For those interested in the intersection of AI development and commercial strategy, this news about AI marks a significant evolution. To discover more about Perplexity’s groundbreaking advertising approach in the field of AI search, you can read the full article here.

Clio: the next-gen AI-powered DevOps assistant

Breaking ground in AI development, Acorn has unveiled Clio. This LLM-powered assistant is redefining DevOps with heightened efficiency and intuitive management solutions.

Key highlights of this LLM news are:

- Multifaceted DevOps Support: Clio offers comprehensive DevOps functionality. It covers cloud resource management across major platforms, including AWS and GCP.

- Advanced Kubernetes and Docker Operations: It simplifies Kubernetes and Docker workflows. This streamlines application deployment and container management.

- GitHub and Secret Management: Clio offers enhanced GitHub integration and more secure secret management.

- Local Execution Model: Clio champions privacy with local executions and no server-side data retention.

- Recognition from DevOps Leaders: Leading figures like John Willis praise Clio for its natural language capabilities and its seamless integration into current workflows.

- Flexible and Context-Sensitive: Clio adapts its commands to the project context, pointing to the future of AI in DevOps.

- Room for Growth: Early users recognize Clio’s potential despite noting areas for improvement in user experience.

The progression of LLM news is rapid, and with Clio’s introduction, the future of AI in DevOps looks more promising than ever. To further explore the impacts of this AI development and deepen your understanding of the latest LLM news, please click here to read the full article.

Meta embarks on AI development with a self-taught LLM evaluator

In a significant LLM news announcement, Meta’s AI research arm is making strides with a novel project.

Key highlights of this LLM news are:

- Self-teaching evaluator: Meta proposes a self-taught evaluator for LLMs, designed to minimize reliance on human effort and time in LLM development.

- Iterative synthetic data use: The Self-Taught Evaluator would enable an LLM to generate its own synthetic data, which can then be used for iterative self-improvement.

- LLM-as-a-judge: The new system employs LLMs as evaluators, trained on synthetic data instead of costly human-annotated data.

- Challenges addressed: Meta acknowledges the limitations of current models on complex tasks such as coding and mathematics.

- Empirical results: Meta’s method improved Llama3-70B-Instruct’s RewardBench score from 75.4 to 88.3 without labeled preference data.

- Impressive comparisons: The Self-Taught Evaluator performed on par with top-tier models, including GPT-4 and the best reward models trained with human-labeled data.
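The iterative loop described in these highlights can be sketched in Python as a toy simulation. This is not Meta’s code: `make_pair`, `ToyJudge`, and the way "fine-tuning" nudges an accuracy number are all hypothetical placeholders for real LLM generation and training. The sketch only shows the shape of the idea, which is to build preference pairs whose label is known by construction, keep the judgments that agree with that label, and train on them over several rounds.

```python
from __future__ import annotations

import random
from dataclasses import dataclass


@dataclass
class PreferencePair:
    instruction: str
    chosen: str    # response written for the real instruction
    rejected: str  # response written for a perturbed instruction


def make_pair(instruction: str) -> PreferencePair:
    """Hypothetical stand-in for an LLM call that writes two contrasting responses."""
    return PreferencePair(
        instruction=instruction,
        chosen=f"on-topic answer to '{instruction}'",
        rejected=f"answer to a different question than '{instruction}'",
    )


class ToyJudge:
    """Hypothetical judge: `accuracy` is the chance it picks the chosen response."""

    def __init__(self, accuracy: float, seed: int = 0) -> None:
        self.accuracy = accuracy
        self._rng = random.Random(seed)

    def prefers_chosen(self, pair: PreferencePair) -> bool:
        return self._rng.random() < self.accuracy

    def fine_tune(self, kept: list[PreferencePair]) -> None:
        # Placeholder for real training: more verified examples, slightly better judge.
        self.accuracy = min(0.99, self.accuracy + 0.005 * len(kept))


def self_improve(judge: ToyJudge, instructions: list[str], rounds: int) -> list[float]:
    """Return the judge's accuracy before training and after each round."""
    history = [judge.accuracy]
    for _ in range(rounds):
        pairs = [make_pair(text) for text in instructions]
        # Keep only judgments that agree with the label known by construction.
        kept = [pair for pair in pairs if judge.prefers_chosen(pair)]
        judge.fine_tune(kept)
        history.append(judge.accuracy)
    return history


instructions = [f"task {i}" for i in range(20)]
history = self_improve(ToyJudge(accuracy=0.6), instructions, rounds=3)
print(history)
```

No human-labeled preference data enters the loop: the only supervision is the synthetic label attached when each pair is created.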

The landscape of AI development and LLM news continues to evolve, and with Meta’s latest innovation, the implications for future AI capabilities seem boundless. To dive deeper into the intricacies of this news about AI, please read the full article here.

Also read: What is a step-by-step guide for AI application development?

Microsoft’s Phi 3.5 LLM leads in AI development, surpassing Meta and Google

The tech world has buzzed with exciting LLM news as Microsoft unveils its Phi 3.5 models, setting new benchmarks in AI development.

Key highlights of this LLM news are:

- Industry leadership: Microsoft’s Phi 3.5 LLM models have outperformed rivals from Meta and Google, marking a notable achievement in AI development.

- Comprehensive upgrades: Released on the Hugging Face platform under the MIT License, the Phi 3.5 family includes models tailored for various applications, showcasing Microsoft’s commitment to innovating in AI development.

- High-quality training data: The models leverage synthetic data as well as filtered publicly available documents, focusing on highly reasoning-dense scenarios.

- Outstanding performance: Despite having fewer parameters, Phi 3.5 models excelled in benchmarks for reasoning and multilingual capabilities, highlighting Microsoft’s efficient use of resources in AI development.

- Core innovations: The Phi 3.5 family includes models specifically designed for constrained environments, highlighting Microsoft’s focus on versatile applications in AI development.

- Limitations acknowledged: Microsoft transparently addresses the factual knowledge limitations of its models and suggests augmentation strategies to compensate.

In a field that’s rapidly evolving, this LLM news positions Microsoft at the forefront of AI development. To learn more about Microsoft’s pioneering efforts and how they stack up against competitors in this exciting space, click to read the full article.

LLM news: Revolutionizing AIOps with innovative applications

The landscape of AI development and observability is witnessing a transformation as Large Language Models (LLMs) pioneer substantial progress in the industry.

Key highlights of this LLM news are:

- Forefront of innovation: LLMs are increasingly becoming central in AI development, altering everyday interactions between the general public and artificial intelligence.

- Pragmatic solutions: The shift from mere discovery to real-world application in AI development is underscored by LLMs’ capacity to address practical problems with feasible solutions.

- Senser’s AIOps platform: Demonstrating a tangible application within IT operations, Senser’s platform utilizes LLMs to streamline and improve database query management.

- Enhanced communication interface: Surpassing traditional voice assistants, LLMs offer a communication method that is both more natural and more accurate, sidestepping common issues like lack of context and precision.

- Innovative two-layered solution: Senser’s approach showcases the intricate and inventive use of LLMs necessary for efficient data interaction and tailored queries.

This pivotal moment in AI development and AIOps application indicates a bright future, inching closer to realizing Alan Turing’s vision. To dive deeper into how LLMs are revolutionizing AIOps and establishing new benchmarks in AI development, read this article here.

Also read: Top 10 AI development companies in 2024

LLM news from Anthropic: Legal controversies shadowing AI development

The domain of AI development is currently tangled in legal intricacies as Large Language Models (LLMs) find themselves at the center of copyright disputes.

Key highlights of this LLM news are:

- Escalating legal battles: Anthropic, known for its LLM prowess, is facing a lawsuit over its training practices for the AI model Claude, marking a contentious moment in AI development.

- Copyright claims: Three writers allege that their work was used without consent, highlighting ongoing copyright concerns in AI development.

- Recurring legal issues: This legal challenge is not Anthropic’s first; it follows a similar lawsuit by music publishers and underscores a pattern in the AI industry’s legal struggles.

- Industry-wide impact: The lawsuit against Anthropic echoes similar legal concerns faced by other AI giants like OpenAI and Meta, stressing the pervasive legal challenges in news about AI.

- Focus on ethical training: This case brings to light the crucial need for ethically sound practices in the training of LLMs, a key concern that could redefine the future of AI strategies and implementations.

This critical phase in the AI development landscape, riddled with legal challenges, might reshape future practices and ethical frameworks in the industry. LLMs continue to make news about AI, but now more in the courtroom than in the lab.

For a more detailed exploration of how legal controversies are influencing AI development and shaping the news about AI, read this article here.

Army CIO laser-focused on LLMaaS for AI development

In recent LLM news, the U.S. Army is taking significant strides in AI development with the use of LLMaaS (Large Language Models as a Service).

Key highlights of this LLM news are:

- Strategic LLMaaS implementation: The U.S. Army is strategically incorporating Large Language Models as a Service (LLMaaS) into its operations, placing it in the vanguard of AI development within military sectors.

- Diverse AI experimental projects: The Army currently has nine different LLM initiatives, each serving to explore the vast capabilities of AI in enhancing military efficacy.

- Enhancement of soldier and aviation safety: Prioritizing the welfare of soldiers and the security of aviation operations, the Army’s LLM experiments showcase the practical benefits and applications of AI technology.

- Release of generative AI guidance: The Army has introduced its initial set of guidelines on generative AI use, emphasizing its serious investment in the responsible and effective integration of LLMs.

- Public-private partnerships: By partnering with small businesses, the Army is scouting for innovative AI development opportunities and fostering a collaborative environment for technological advancement.

- Optimization of legal workflows: In an effort to streamline legal and administrative procedures, the Army is leveraging LLMs to fast-track policy creation and simplify complex bureaucratic tasks.

This focused endeavor in LLM news reflects the Army’s dedication to adopting AI development as a cornerstone of modern military operations. As AI continues to evolve, news about AI in defense sectors proves the potency and versatility of LLM technology.

For those seeking a deeper understanding of the Army’s innovative journey in LLM adoption and its potential to reshape AI development, read the full article here.

Stay updated with the latest LLM news on AI development with High Peak

For those inspired to integrate similar AI capabilities into their systems or explore tailored AI solutions, be sure to check out our news section for the latest insights and updates.

Book a consultation with our experts today and unlock the potential of cutting-edge AI technologies tailored specifically to your needs.